According to a Japanese proverb:

“The frog in the well knows nothing of the mighty ocean.” Every day is an opportunity to climb out of the well and peek at the ocean in search of understanding… Below are some peeks from my vista at the edge of the well last week…

Table of Contents

- 1 Getting Visual…

- 2 If You Read One Thing Today – Make Sure it is This…

- 3 Consequential Thinking about Consequential Matters…

- 4 Big Ideas…

- 5 Big thinking…

- 6 Patience…

1 Getting Visual…

What makes up the world around us? Go find out the details of the biomass in this interesting interactive: https://biocubes.net/

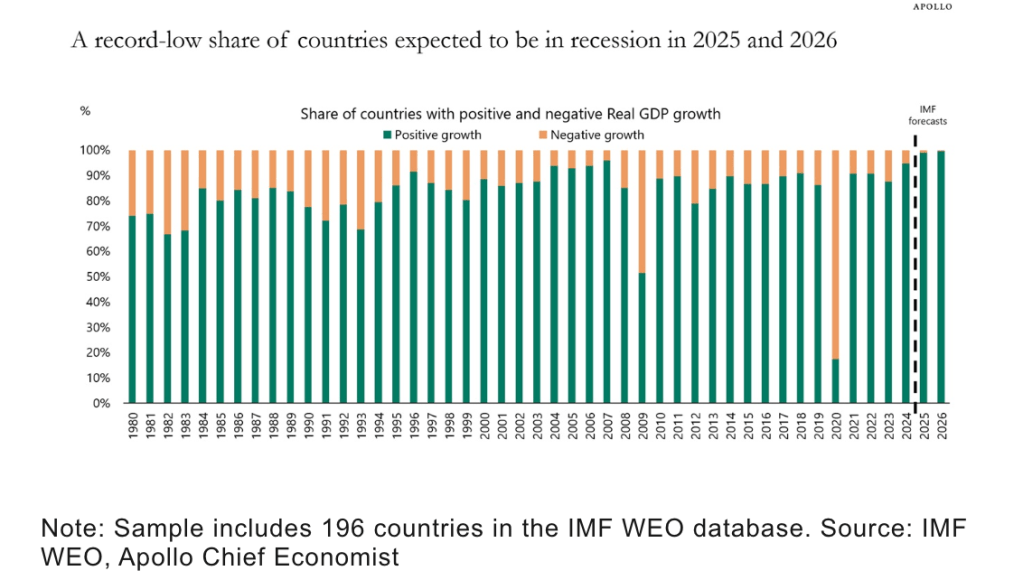

Wild World – Stable (forecasted) Economy – The IMF produces forecasts for 196 countries in the world, and their latest forecast shows that a record-low share of countries are expected to be in recession in 2025 and 2026.

Make your country great again – the rise of domestic agendas – In the political realm, I see a reversion to the mean. After decades of globalism and cosmopolitan thinking, many countries, from the US to Argentina and Canada to India, are pivoting inward, focusing on nationalism or populist policies. That isn’t inherently surprising; if you zoom out, these pendulum swings happen – although we’re now at the highest level since the 1930s.

Inflection Points

“When I look back on our recent history—from around 2014 to today—I see an era that future historians will call an inflection point. Much as the 1920s and 1930s fundamentally differed from the world of the late 1800s, we’re going through a similarly significant transition now. We have game-changing technologies and volatile geopolitics. In my view, this period will be studied as the moment when economic and political models were reshaped. I wrote a book about this in 2022; if you’re new to my work you can pick it up here. I draw heavily on the work of economist Carlota Perez who outlined how the changing underlying technological paradigm brings with it financial speculation, bubbles and wealth accumulation. It requires an institutional recomposition which fundamentally heralds a golden age. Things are breaking apart, but also recombining in new ways. Yes, it is about tech but it’s also about deeper social, cultural and economic realignments.” – Azeem Azhar

The Power of Innovation

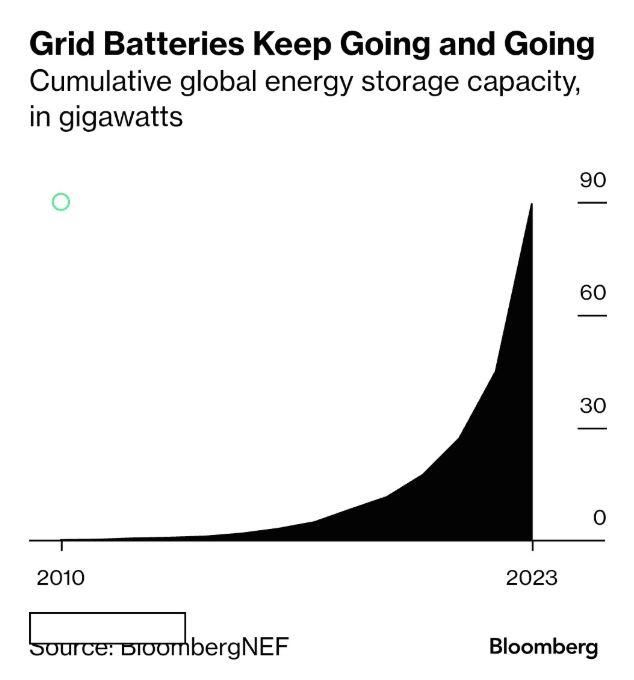

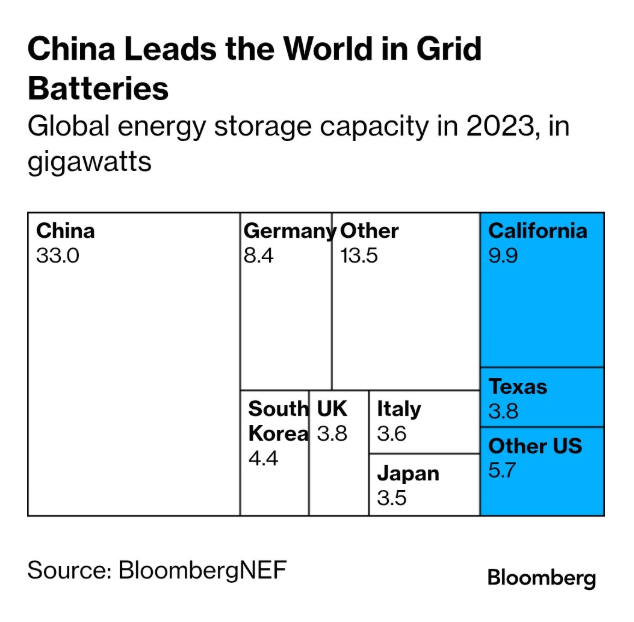

Global prices for large-scale energy storage systems have plunged 73% since 2017, according to BNEF. China, which requires batteries to be installed at new solar or wind farms, overtook the US as the world’s biggest energy storage market in 2023 and was expected to add 36 gigawatts of batteries in 2024, equivalent to the output of 36 nuclear reactors. The US, in contrast, was on track to add almost 13GW in 2024, according to BNEF research, with an additional 14GW coming in 2025.

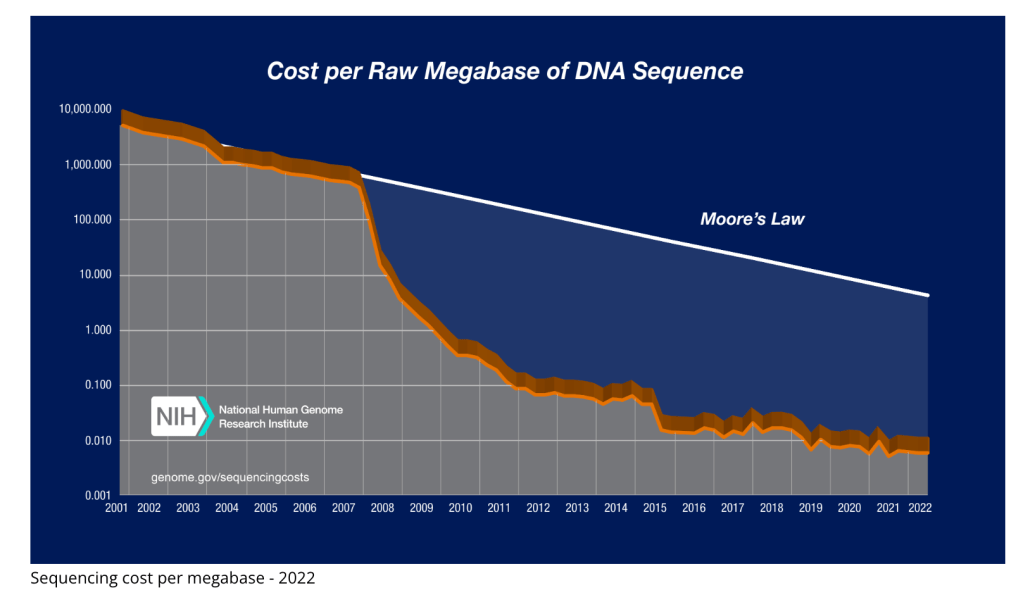

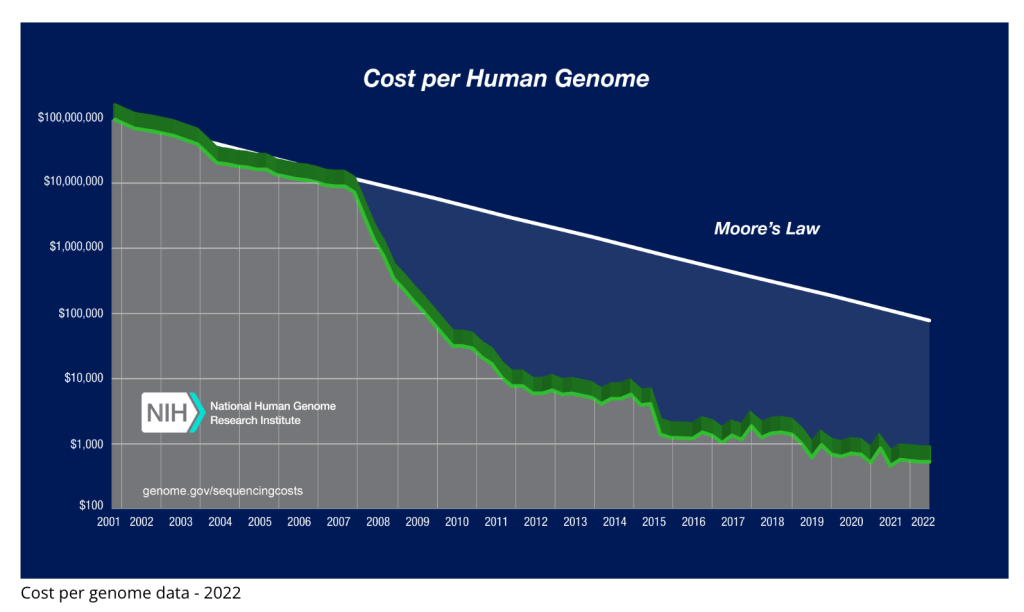

Exponential Power – Human ingenuity drives progress. A look at DNA sequencing costs is a powerful example…

Learn more here: https://www.genome.gov/about-genomics/fact-sheets/DNA-Sequencing-Costs-Data

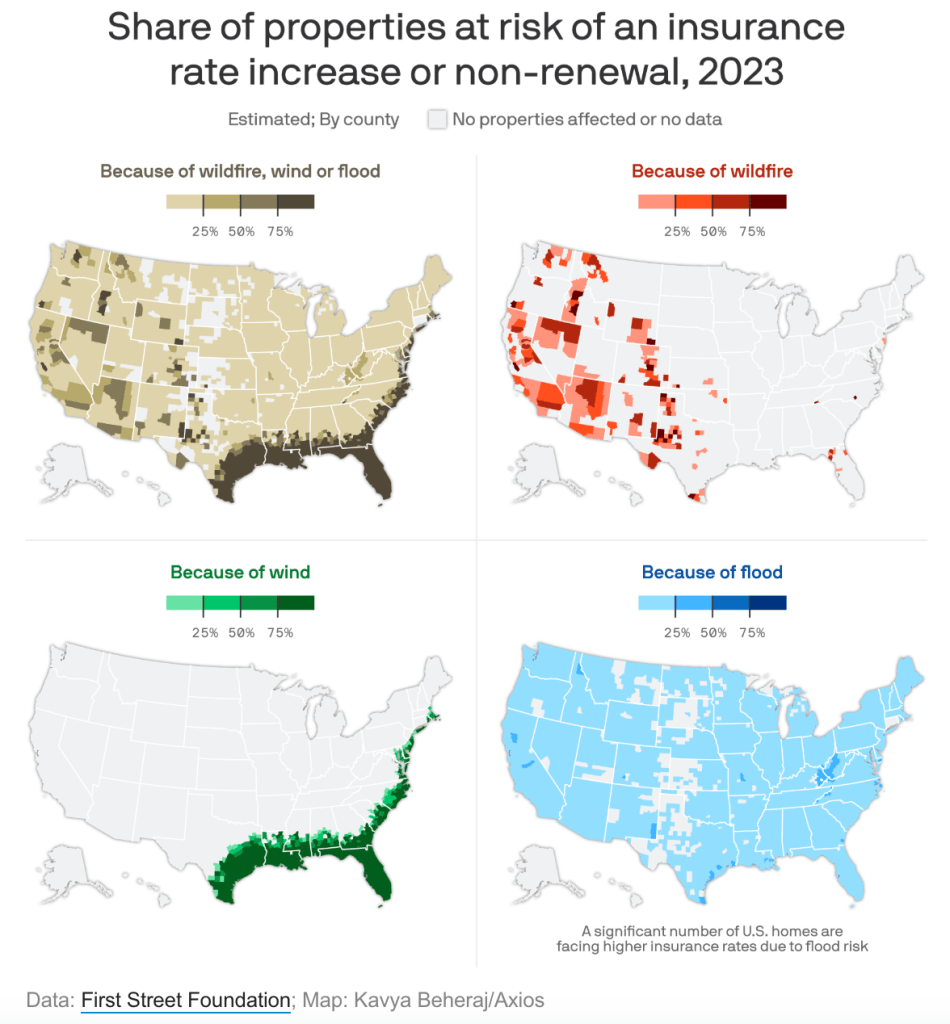

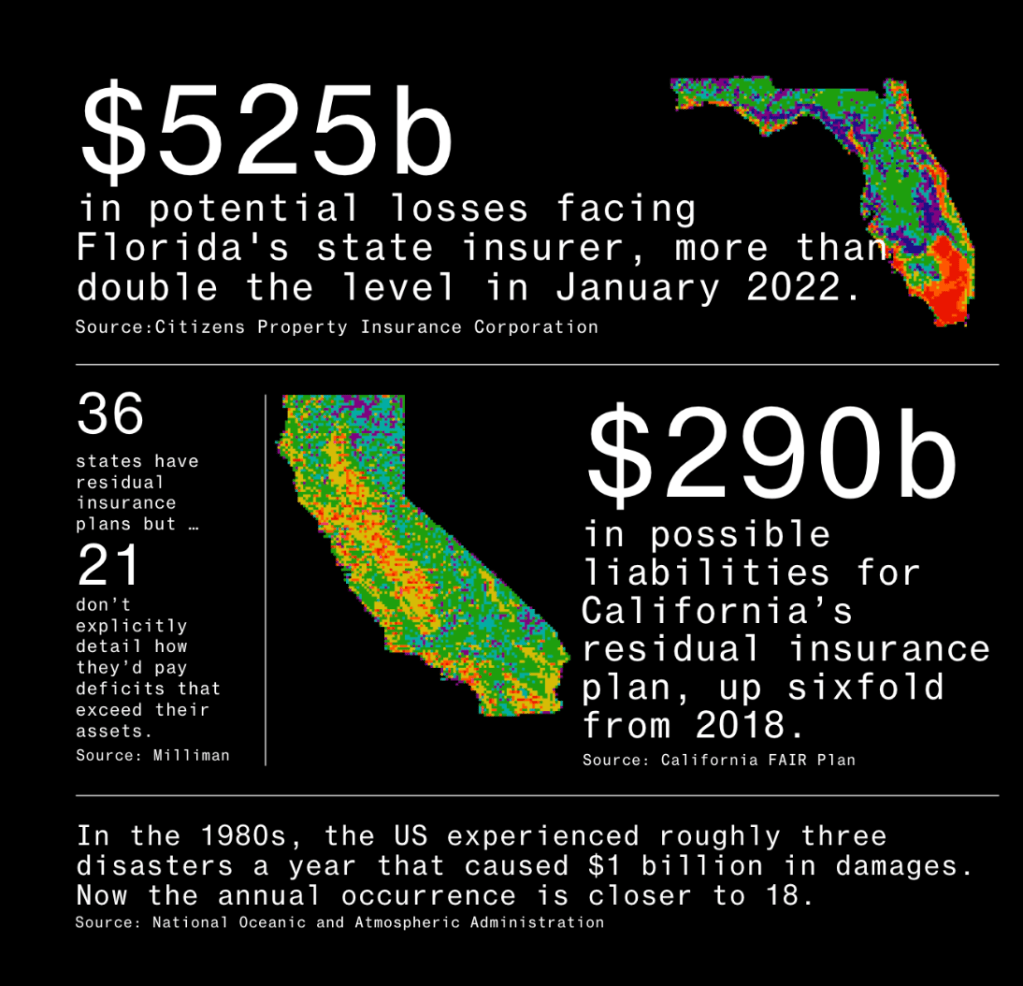

Disaster – As private insurance pulls out of insuring homes in the most disaster-prone American states, public last-resort backstops are absorbing the risks, so far to the tune of $1 trillion. According to a 2018 study by the University of Cambridge and Munich Re, if a Category 5 hurricane hit Miami and the Florida coast, it could cause a staggering $1.35 trillion in damages, more than $60,000 for every person in the state. … Fire damage from 2017 and 2018 wiped out more than twice the previous 25 years’ worth of underwriting profits for the California insurance market.

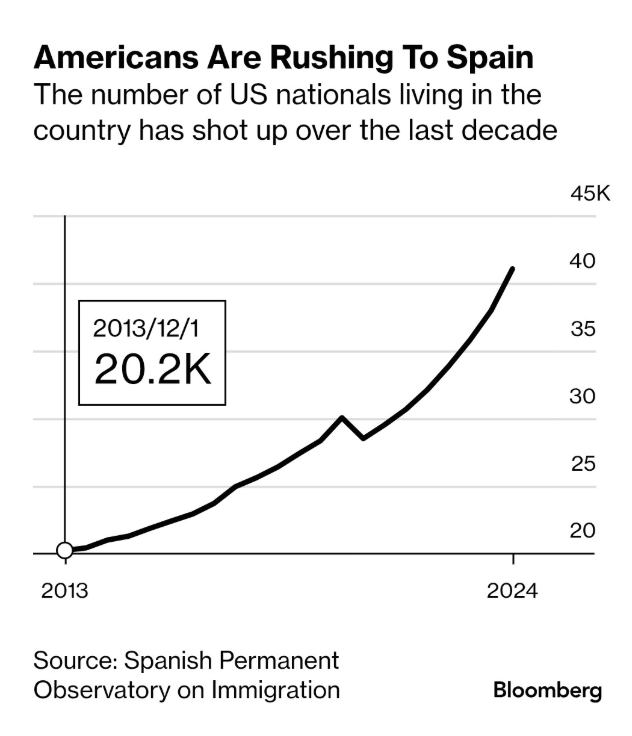

On the Move – There were roughly 41,000 Americans living in Spain in June, a 39% increase from three years ago and double the number from 2014. Nearly 15,000 golden visas were issued in Spain since the program’s launch in 2013, according to the most recent annual government figures. Spain recently announced it would officially end its golden visa program on April 3, roughly a year after the change was initially proposed. But that hasn’t stopped Americans from seeking ways to gain residency in the country. And there’s been increased interest in the aftermath of the contentious presidential election. “It’s been non-stop requests,” said Matt Anderson, an American who works as a real estate agent in Mallorca. He said there was a 12% increase in US buyers on the Spanish island over the last year and cited the quality of international schools and warm weather as key reasons driving many Americans to relocate.

2 If You Read One Thing Today – Make Sure it is This…

Brian Klaas, author of the interesting book Fluke: Chance, Chaos, and Why Everything We Do Matters, shares the essays he most enjoyed writing in 2024.

There are plenty of interesting perspectives, and his short video ‘The Edge of Chaos’ in the intro is a good starting point – it’s something to think about… go do it here:

https://www.forkingpaths.co/p/the-highlights-of-2024

Some Takeaways

- 1 We are different from all other humans in history

Depending on which scientist you ask, Homo sapiens emerged between 200,000 and 315,000 years ago. Let’s split the difference, go with 257,000, and say that there have been about 9,500 generations of humans (the average generation throughout human history lasts 26.9 years).

Our way of life was dominated by hunting and gathering for 9,100 of those 9,500 generations—96 percent of the existence of our species.

Then, about 10,000 years ago, agrarian societies formed. Farming replaced hunting and gathering. (It was disastrous for our health). In the 18th century, society shifted again, toward industrialization. And now, in the last thirty years or so, we’ve ushered in another shift, with computerization, the internet, and artificial intelligence, a society defined by interconnected information and an unprecedented flow of ideas.

If the history of humanity were condensed into a single 24-hour day, this is roughly what it would look like:

- The Hunter-Gatherer Age—23 hours and 3 minutes

- The Agrarian Age—55 minutes and 32 seconds

- The Industrial Age—1 minute and 17 seconds

- The Information Age—11 seconds

More than half of the world’s population is under the age of 30, meaning that more than half of us have only lived in those 11 seconds – an era that is, without question, the weirdest period in human history.

Consider, for example, this extraordinary fact, conveyed by the Dutch sociologist Ruut Veenhoven:

“the average citizen lives more comfortably now than kings did a few centuries ago.”

We, not them, are the weird ones.
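The 24-hour-day framing above uses simple proportional arithmetic. You divide each era’s length by the total span of human history and multiply by the 86,400 seconds in a day. Below is a minimal Python sketch. It assumes the essay’s 257,000-year total and 26.9-year generation length. The function names are illustrative and do not come from the essay.

```python
# Sketch of the proportional scaling behind the "24-hour day" framing.
# Assumptions (taken from the essay): total human history = 257,000 years,
# average generation length = 26.9 years. Function names are illustrative.

TOTAL_YEARS = 257_000
GENERATION_YEARS = 26.9
SECONDS_PER_DAY = 24 * 60 * 60


def generations(years: float, generation_years: float = GENERATION_YEARS) -> float:
    """Number of generations that fit into a span of years."""
    return years / generation_years


def years_to_clock(years: float, total_years: float = TOTAL_YEARS) -> str:
    """Scale a span of years onto a 24-hour day, formatted as hours/minutes/seconds."""
    seconds = round(years / total_years * SECONDS_PER_DAY)
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}h {minutes}m {secs}s"


if __name__ == "__main__":
    print(f"Generations in {TOTAL_YEARS:,} years: {generations(TOTAL_YEARS):,.0f}")
    # Illustrative span: roughly the last 33 years.
    print("33 years on the 24-hour clock:", years_to_clock(33))
```

Run as a script, it prints about 9,554 generations, in line with the essay’s “about 9,500”. It also maps a 33-year span to 11 seconds. On this clock each year is worth roughly a third of a second, so the exact figures shift depending on where you draw the era boundaries.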

Read in full here: https://www.forkingpaths.co/p/we-are-different-from-all-other-humans

- 2 The Evolution of Stupidity (and Octopus Intelligence)

Sadly, so much of our discourse around intelligence and stupidity gets hijacked by pseudoscience, racism, and debates over whether arbitrary measurements like IQ are valid. We ignore more interesting questions around intelligence and stupidity that we can learn not from ourselves, but from other species. In particular:

- What, specifically, does it mean to be “intelligent?” What do we mean when we say that humans and chimps and dolphins and crows are intelligent?

- Why did some species—including us—become smart, while others didn’t?

- Why is stupidity still so widespread in humans?

Pondering these questions requires going on a bit of a wild ride, exploring fascinating animal worlds from chimpanzees to cephalopods, as we begin to understand our own cleverness—and stupidity—through the eyes of an octopus, the closest thing to alien intelligence on Earth.

Read it here in full: https://www.forkingpaths.co/p/the-evolution-of-stupidity-and-octopus

- 3 De-Extinction and the Resurrection of the Woolly Mammoth

In 2028, if all goes according to plan, a six foot five narcoleptic scientist with a bushy white beard will resurrect the first living woolly mammoth in 4,000 years. And that mammoth—if the science works—may soon be lumbering, in all its hairy glory, across the frozen plains of North Dakota.

This is the story of the science, the ethics, and the risks and rewards of the emerging field of de-extinction—the revival of species that no longer exist.

Humans may soon be able to bring species back from the dead. But should we?

Read it here in full: https://www.forkingpaths.co/p/de-extinction-and-the-resurrection

3 Consequential Thinking about Consequential Matters…

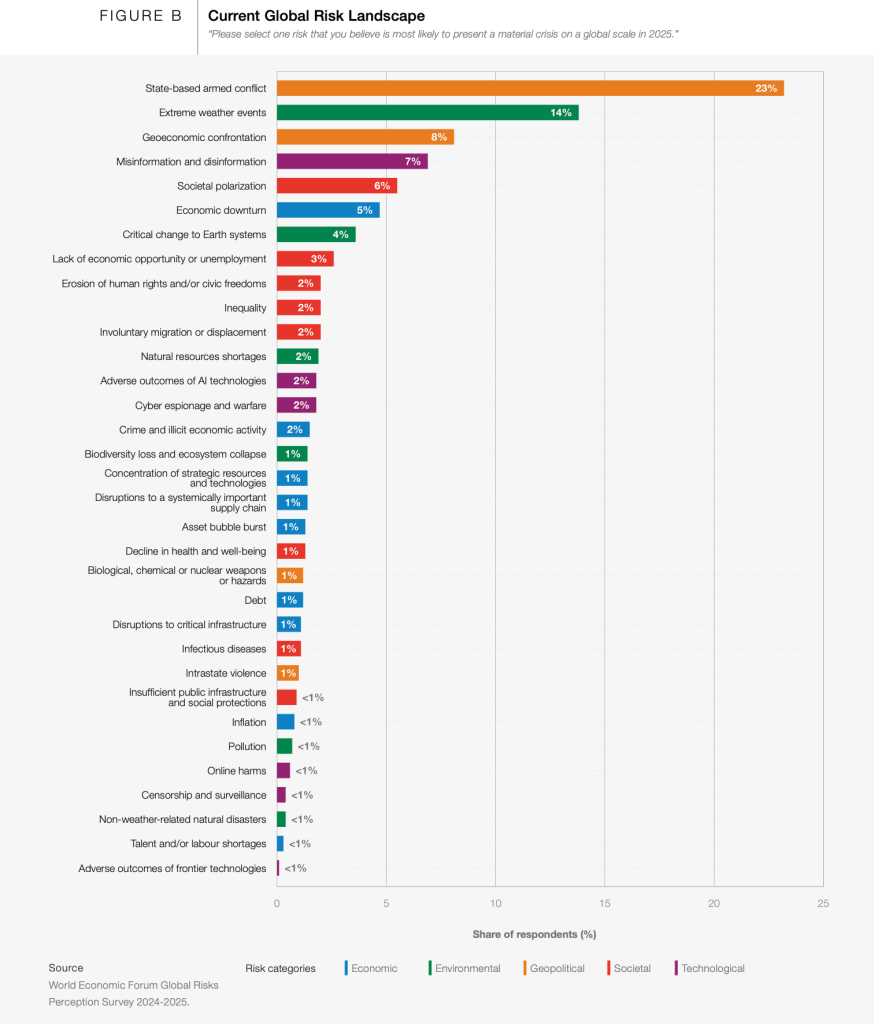

The WEF has released a 104-page report on the global risks for ‘Davos Man’ to worry about in 2025. As much as it has been maligned (often rightly so), the WEF does provide good insights, and I like its exercise of looking out two years and then 10 years. There is some Consequential Thinking about Consequential Matters in this report – go take a look: https://reports.weforum.org/docs/WEF_Global_Risks_Report_2025.pdf

Some Takeaways

“The multi-decade structural forces highlighted in last year’s Global Risks Report – technological acceleration, geostrategic shifts, climate change and demographic bifurcation – and the interactions they have with each other have continued their march onwards. The ensuing risks are becoming more complex and urgent, and accentuating a paradigm shift in the world order characterized by greater instability, polarizing narratives, eroding trust and insecurity. Moreover, this is occurring against a background where today’s governance frameworks seem ill-equipped for addressing both known and emergent global risks or countering the fragility that those risks generate.”

“Concerns about state-based armed conflict and geoeconomic confrontation have on average remained relatively high in the ranks over the last 20 years, with some variability. Today, geopolitical risk – and specifically the perception that conflicts could worsen or spread – tops the list of immediate-term concerns.”

“An estimated two-thirds of the world’s population – 5.5 billion people – is online and over five billion people use social media. The increasing ubiquity of sensors, CCTV cameras and biometric scanning, among other tools, is further adding to the digital footprint of the average citizen. In parallel, the world’s computing power is increasing rapidly. This is enabling fast-improving AI and GenAI models to analyse unstructured data more quickly and is reducing the cost to produce content. With Societal polarization ranking #4 in the GRPS two-year ranking, the vulnerabilities associated with citizens’ online activities look set to continue deepening hand in hand with societal and political divisions. Taken as a whole, these developments threaten to fundamentally undermine individuals’ trust in information and institutions.

Like last year, Misinformation and disinformation tops this year’s GRPS two-year ranking. The amount of false or misleading content to which societies are exposed continues to rise, as does the difficulty that citizens, companies and governments face in distinguishing it from true information. The interplay of rising Misinformation and disinformation with political and Societal polarization creates greater scope for algorithmic bias. If human, institutional and societal biases are not addressed, and/or best practices in modelling are neglected, the conditions will be ripe for algorithmic bias to become more prevalent. Such bias, whether inherent in data, models or their creators, can lead to unjust outcomes.”

Super-ageing societies

Countries are termed “super-ageing” or “super-aged” when over 20% of their populations are over 65 years old.

Several countries have already exceeded that mark, led by Japan and including some countries in Europe. Many more countries across Europe and Eastern Asia in particular are projected to do so by 2035. Globally, the number of people aged 65 and older is expected to increase by 36%, from 857 million in 2025 to 1.2 billion in 2035.
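The super-ageing definition and the growth figure above both come down to simple ratios. Below is a minimal Python sketch using the 20% threshold and the 857 million and 36% figures from the report. The country numbers in the usage lines are hypothetical, and so are the function names.

```python
# Sketch of the "super-ageing" test and the growth arithmetic quoted above.
# The 20% threshold and the 857 million / 36% figures come from the report;
# the country numbers below and the function names are hypothetical.

SUPER_AGED_THRESHOLD = 0.20  # share of the population over 65


def share_65_plus(pop_65_plus: float, total_pop: float) -> float:
    """Fraction of a population over 65."""
    if total_pop <= 0:
        raise ValueError("total_pop must be positive")
    return pop_65_plus / total_pop


def is_super_aged(pop_65_plus: float, total_pop: float) -> bool:
    """True when strictly more than 20% of the population is over 65."""
    return share_65_plus(pop_65_plus, total_pop) > SUPER_AGED_THRESHOLD


def project(value: float, pct_increase: float) -> float:
    """Apply a percentage increase to a starting value."""
    return value * (1 + pct_increase / 100)


if __name__ == "__main__":
    # Hypothetical country: 29 million people over 65 out of 120 million.
    print(is_super_aged(29e6, 120e6))  # True (about 24%)
    # 857 million people aged 65+ in 2025, growing 36% by 2035.
    print(f"{project(857e6, 36) / 1e9:.2f} billion")
```

A 36% rise from 857 million comes to roughly 1.17 billion, which the report rounds to 1.2 billion.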

By 2035, populations in super-ageing societies could be experiencing a set of interconnected and cascading risks that underscore the GRPS finding that the severity – albeit not the ranking – of the risk of Insufficient public infrastructure and social protections is expected to rise from the two-year to the 10-year time horizon. An ongoing concern is that government funding for public infrastructure and social protections gets diverted during short-term crises.

Some super-ageing societies could be facing crises in their state pensions systems as well as in employer and private pensions, leading to more financial insecurity in old age and exacerbated pressure on the labour force, which includes a growing number of unpaid caregivers. Indeed, super-ageing societies by 2035 are likely to face labour shortages.

The long-term care sector will be especially affected by labour shortage. Care occupations are expected to see significant demand growth globally by 2030. Care systems – health care and social care – in super-ageing societies are already under clear and immediate strain. They will struggle to serve a fast-growing population over 60 years of age that has additional care needs while recruiting and retaining enough care workers. Care systems are, in great part, funded by governments and account for about 381 million jobs globally – 11.5% of total employment. The accumulation of debt and competing spending needs on, for example, security and defense are likely to constrain the reach and sustainability of public expenditure on care systems over the next decade. Without increased public or blended investment, care demand will continue to be unmet.

Economies already experiencing this challenge are resorting to stop-gap measures, including attracting migrant care workers from other economies. But if this turns into a talent drain from countries with more youthful societies, those countries may then struggle to reap the benefits of their demographic dividend and will, several decades from now, run into super-ageing society challenges of their own.

There will be no easy solutions to this problem set, given the sustained strength to 2035 of the two underlying trends generating higher average dependency ratios, not only across super-ageing societies, but at the global level: declining fertility rates and rising life expectancy, though not necessarily in better health.

4 Big Ideas…

Shortly after ChatGPT’s second birthday, Sam Altman took to his blog to post some ‘reflections’. They hold a few interesting ideas and insights to ponder on GenAI, tech and building a startup – go do it here: https://blog.samaltman.com/reflections

Some Takeaways

In 2022, OpenAI was a quiet research lab working on something temporarily called “Chat With GPT-3.5”. (We are much better at research than we are at naming things.) We had been watching people use the playground feature of our API and knew that developers were really enjoying talking to the model. We thought building a demo around that experience would show people something important about the future and help us make our models better and safer.

We ended up mercifully calling it ChatGPT instead, and launched it on November 30th of 2022.

We always knew, abstractly, that at some point we would hit a tipping point and the AI revolution would get kicked off. But we didn’t know what the moment would be. To our surprise, it turned out to be this.

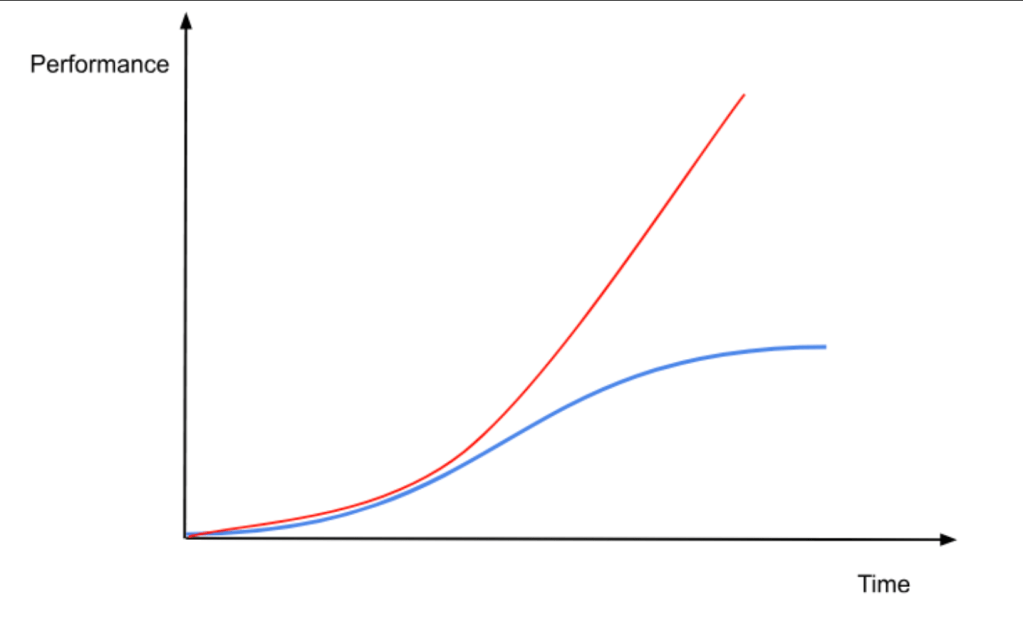

The launch of ChatGPT kicked off a growth curve like nothing we have ever seen—in our company, our industry, and the world broadly. We are finally seeing some of the massive upside we have always hoped for from AI, and we can see how much more will come soon.

The road hasn’t been smooth and the right choices haven’t been obvious.

In the last two years, we had to build an entire company, almost from scratch, around this new technology. There is no way to train people for this except by doing it, and when the technology category is completely new, there is no one at all who can tell you exactly how it should be done.

Building up a company at such high velocity with so little training is a messy process. It’s often two steps forward, one step back (and sometimes, one step forward and two steps back). Mistakes get corrected as you go along, but there aren’t really any handbooks or guideposts when you’re doing original work. Moving at speed in uncharted waters is an incredible experience, but it is also immensely stressful for all the players. Conflicts and misunderstanding abound.

Nine years ago, we really had no idea what we were eventually going to become; even now, we only sort of know. AI development has taken many twists and turns and we expect more in the future.

Some of the twists have been joyful; some have been hard. It’s been fun watching a steady stream of research miracles occur, and a lot of naysayers have become true believers. We’ve also seen some colleagues split off and become competitors. Teams tend to turn over as they scale, and OpenAI scales really fast. I think some of this is unavoidable—startups usually see a lot of turnover at each new major level of scale, and at OpenAI numbers go up by orders of magnitude every few months. The last two years have been like a decade at a normal company. When any company grows and evolves so fast, interests naturally diverge.

“Our vision won’t change; our tactics will continue to evolve.”

We are now confident we know how to build AGI as we have traditionally understood it. We believe that, in 2025, we may see the first AI agents “join the workforce” and materially change the output of companies. We continue to believe that iteratively putting great tools in the hands of people leads to great, broadly-distributed outcomes.

We are beginning to turn our aim beyond that, to superintelligence in the true sense of the word. We love our current products, but we are here for the glorious future. With superintelligence, we can do anything else. Superintelligent tools could massively accelerate scientific discovery and innovation well beyond what we are capable of doing on our own, and in turn massively increase abundance and prosperity.

This sounds like science fiction right now, and somewhat crazy to even talk about it. That’s alright—we’ve been there before and we’re OK with being there again. We’re pretty confident that in the next few years, everyone will see what we see, and that the need to act with great care, while still maximizing broad benefit and empowerment, is so important.

5 Big thinking…

Explore ‘Inversion: The Power of Avoiding Stupidity’ in this excellent short piece from the good people at the FS Blog: https://fs.blog/inversion/

Some Takeaways

“It is not enough to think about difficult problems one way. You need to think about them forwards and backward. Inversion often forces you to uncover hidden beliefs about the problem you are trying to solve.”

“Many problems can’t be solved forward.”

“Say you want to improve innovation in your organization. Thinking forward, you’d think about all of the things you could do to foster innovation. If you look at the problem by inversion, however, you’d think about all the things you could do that would discourage innovation. Ideally, you’d avoid those things. Sounds simple, right? I bet your organization does some of those ‘stupid’ things today.

Another example: rather than thinking about what makes a good life, you can think about what prescriptions would ensure misery.”

“Avoiding stupidity is easier than seeking brilliance.”

“While both thinking forward and thinking backward result in some action, you can think of them as additive vs. subtractive.

Despite our best intentions, thinking forward increases the odds that you’ll cause harm (iatrogenics). Thinking backward, call it subtractive avoidance or inversion, is less likely to cause harm.

Inverting the problem won’t always solve it, but it will help you avoid trouble. You can think of it as the avoiding stupidity filter. It’s not sexy but it’s a very easy way to improve.”

“Spend less time trying to be brilliant and more time trying to avoid obvious stupidity.”

6 Patience…

Have a Great Weekend when you get to that stage…

Sune Hojgaard Sorensen